Using MLIS and Third-Party Models in GenAI Notebooks

This guide demonstrates how to use MLIS and third-party models through a RESTful API endpoint within a GenAI Studio notebook.
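An OpenAI-protocol endpoint is an HTTP service that accepts JSON requests. To illustrate roughly what such an endpoint receives, here is a minimal sketch that builds (but does not send) a chat completion request using only the Python standard library. It assumes the endpoint serves the standard OpenAI `/chat/completions` route under its base URL and accepts a bearer token. The URL, model name, and key are placeholders, and `build_chat_request` is a hypothetical helper. This is not how the Lore SDK sends requests internally.

```python
import json
import urllib.request


def build_chat_request(base_url, model, api_key, prompt, temperature=0.7, max_tokens=1000):
    """Build (but do not send) an OpenAI-style chat completion request.

    Assumes the endpoint exposes the standard /chat/completions route under base_url.
    """
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # bearer-token auth (assumed)
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder values; replace them with your own endpoint details
req = build_chat_request(
    "https://api.example.com/v1", "example_model", "your_actual_api_key_here",
    "What is a llama model?",
)
print(req.full_url)  # https://api.example.com/v1/chat/completions
```

Sending the request with `urllib.request.urlopen(req)` is one way to check that an endpoint responds before you register it with Lore.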

Before You Start

Before you start, install the Lore SDK, initialize a Lore client, and gather the following:

- API key for the third-party model

- Base URL of the inference endpoint

- Model name

- A Python environment with the Lore SDK and pandas installed

- Access credentials for the GenAI Studio API (username, password, address, port number, workspace name, etc.)
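Rather than hard-coding the API key, base URL, and model name in a notebook cell, you might read them from environment variables. The sketch below assumes hypothetical variable names (`MODEL_ENDPOINT_API_KEY`, `MODEL_ENDPOINT_BASE_URL`, `MODEL_ENDPOINT_MODEL`); the `EndpointConfig` class and `load_endpoint_config` helper are illustrative, not part of the Lore SDK.

```python
import os
from dataclasses import dataclass


@dataclass
class EndpointConfig:
    """Settings for an OpenAI-protocol endpoint (field names are illustrative)."""
    api_key: str
    base_url: str
    model: str


def load_endpoint_config(prefix="MODEL_ENDPOINT_"):
    """Read endpoint settings from environment variables.

    The variable names are hypothetical. Raises KeyError listing every
    variable that is missing or empty.
    """
    names = {"api_key": "API_KEY", "base_url": "BASE_URL", "model": "MODEL"}
    missing = [prefix + s for s in names.values() if not os.environ.get(prefix + s)]
    if missing:
        raise KeyError(f"Missing environment variables: {', '.join(missing)}")
    return EndpointConfig(**{field: os.environ[prefix + s] for field, s in names.items()})
```

You could then pass `config.api_key`, `config.base_url`, and `config.model` where the examples use placeholder strings.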

Step-by-Step Guide

Set Up the Environment

Make sure your Python environment has all required dependencies installed, including the Lore SDK and pandas, which the examples in this guide import.
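One quick way to confirm this is to check that each package can be found before importing it. This minimal sketch uses `importlib.util.find_spec` from the standard library; `lore` and `pandas` are the top-level import names used in this guide's examples, and `missing_packages` is a hypothetical helper.

```python
import importlib.util


def missing_packages(names):
    """Return the top-level import names in `names` that cannot be found."""
    return [name for name in names if importlib.util.find_spec(name) is None]


# "lore" (the Lore SDK) and "pandas" are imported by the examples in this guide
missing = missing_packages(["lore", "pandas"])
if missing:
    print("Install these packages before continuing:", ", ".join(missing))
else:
    print("All required packages are available.")
```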

Configure the API Key and Endpoint

Replace the placeholder values with your actual API key, base URL, and model name to get started.

from lore.types import enums as bte

# `lore` is the Lore client you initialized before starting
API_KEY = "your_actual_api_key_here"

lore.load_model_with_openai_protocol(
    base_url="https://api.example.com/v1/models/generative-model",
    model="example_model",
    api_key=API_KEY,
    # Supported endpoint types: OpenAI's chat completion and completion endpoints
    endpoint_type=bte.OPENAI_PROTOCOL_ENDPOINT_TYPE.CHAT_COMPLETION,
)

Generate Model Outputs

You’re now ready to generate outputs from your input prompts. For example, here’s how to generate a simple response:

out = lore.generate(
    "What is a llama model?",
    generation_config={
        "temperature": 0.7,
        "max_tokens": 1000,
    },
)

print(out)

Run Generate on a Dataset

Follow these steps to run the model on each entry in your dataset.

- Prepare the dataset: Load your dataset into a DataFrame or similar structure.

- Define a prompt template: Craft a template for the prompts to be generated for each dataset entry.

- Generate outputs on the dataset: Use the model to create responses for each dataset entry.
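For the first step, if your complaints are stored in a CSV file, a sketch like the following reads one text column into the same column-oriented dict shape as the `data` example below, skipping blank entries so they don't cost a generation call. The CSV layout, the sample row, and the `read_column` helper are hypothetical; with pandas, `pd.read_csv` followed by `dropna(subset=[...])` is the usual route, since empty fields are read as NaN.

```python
import csv
import io


def read_column(csv_file, column):
    """Read one text column from a CSV file into a {column: [values]} dict.

    Rows where the column is missing or blank are skipped.
    """
    values = []
    for row in csv.DictReader(csv_file):
        text = (row.get(column) or "").strip()
        if text:  # skip rows with an empty narrative
            values.append(text)
    return {column: values}


# Inline, made-up sample standing in for a real file;
# for a file on disk, use open("complaints.csv", newline="") instead
sample_csv = io.StringIO(
    "Consumer_complaint_narrative,Product\n"
    "\"Disputed an account that is still on my report.\",Credit reporting\n"
    ",Credit reporting\n"
)
data = read_column(sample_csv, "Consumer_complaint_narrative")
print(data)
```

The returned dict can be passed directly to `pd.DataFrame`.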

from lore.types import base_types as bt

import pandas as pd

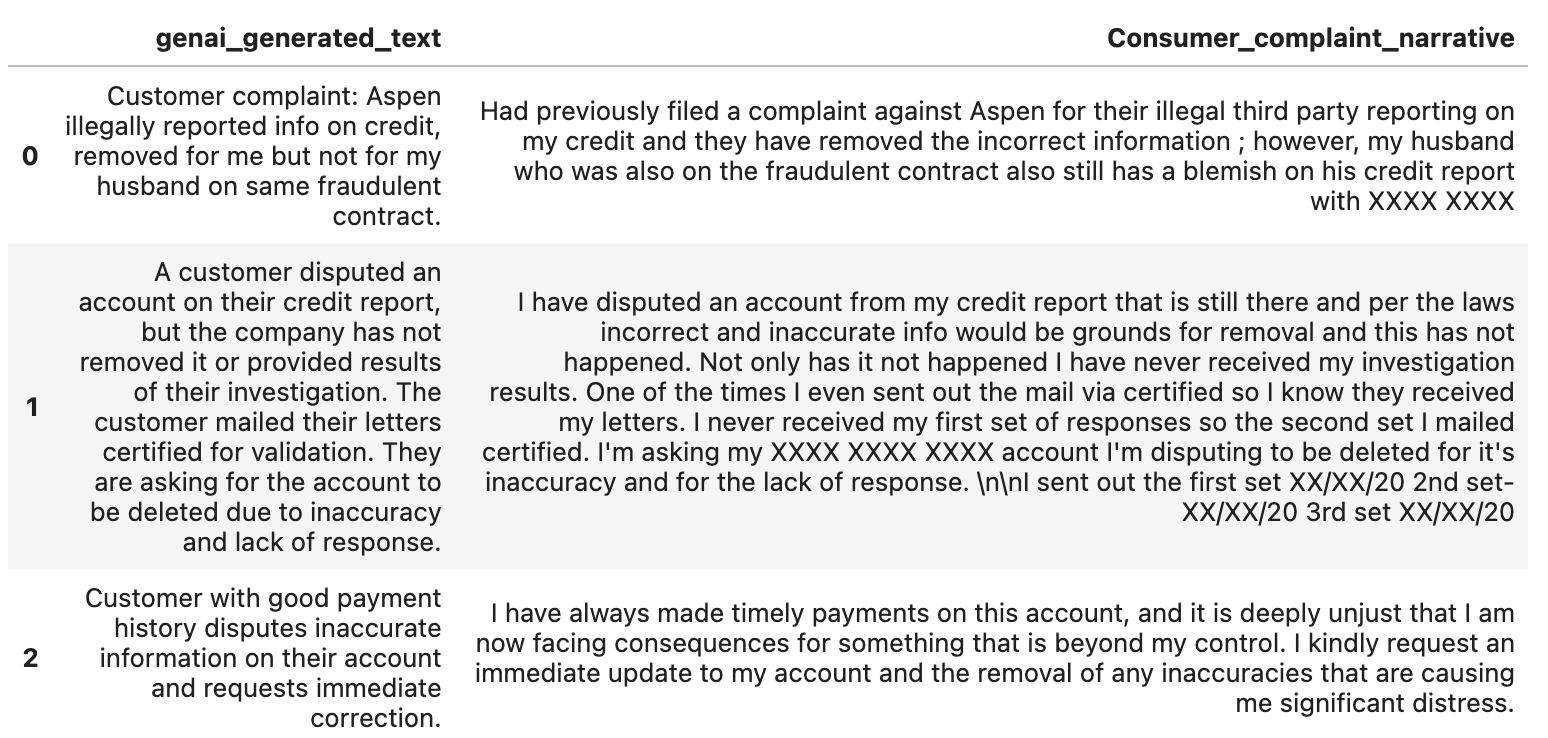

# Example dataset (replace with your actual dataset)

data = {

"Consumer_complaint_narrative": [

"Had previously filed a complaint against Aspen for their illegal third party reporting on my credit and they have removed the incorrect information; however, my husband who was also on the fraudulent contract also still has a blemish on his credit report with XXXX XXXX",

"I have disputed an account from my credit report that is still there and per the laws incorrect and inaccurate info would be grounds for removal and this has not happened. Not only has it not happened I have never received my investigation results. One of the times I even sent out the mail via certified so I know they received my letters. I never received my first set of responses so the second set I mailed certified. I'm asking my XXXX XXXX XXXX account I'm disputing to be deleted for its inaccuracy and for the lack of response."

]

}

df = pd.DataFrame(data)

# Define the prompt template

prompt_template = bt.PromptTemplate(

template="Summarize the following customer complaint in less than 20 words: {{Consumer_complaint_narrative}}"

)

# Generate outputs on the dataset

out_dataset = lore.generate_on_dataset(

dataset=df, prompt_template=prompt_template, number_of_samples=10

)Generate Outputs on a Saved Dataset #

To generate outputs on a dataset already saved in GenAI Studio:

from lore.types import base_types as bt

# Get a saved dataset from GenAI Studio by name
dataset = lore.get_dataset_by_name("<your_dataset_name>")

# Define the prompt template (the saved dataset must have a Consumer_complaint_narrative column)
prompt_template = bt.PromptTemplate(
    template="Summarize the following customer complaint in less than 20 words: {{Consumer_complaint_narrative}}"
)

# Generate outputs on the dataset
out_dataset = lore.generate_on_dataset(
    dataset=dataset, prompt_template=prompt_template, number_of_samples=10
)

# Sample rows from the output dataset to inspect the results
sample = lore.sample_dataset(out_dataset, number_of_samples=10)
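Before spending API calls on a whole dataset, it can help to preview the exact prompts a template will produce. The sketch below mimics the `{{column}}` substitution that the prompt templates above appear to perform, using a regular expression; it is an approximation for previewing, not Lore's implementation, and `render_prompt` is a hypothetical helper.

```python
import re

# Matches {{column_name}} placeholders like the one in the prompt templates above
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render_prompt(template, row):
    """Fill each {{column}} placeholder in `template` with the value from `row` (a dict).

    Raises KeyError if the template references a column the row does not have.
    """
    return PLACEHOLDER.sub(lambda m: str(row[m.group(1)]), template)


template = "Summarize the following customer complaint in less than 20 words: {{Consumer_complaint_narrative}}"
row = {"Consumer_complaint_narrative": "I never received my investigation results."}
print(render_prompt(template, row))
# prints: Summarize the following customer complaint in less than 20 words: I never received my investigation results.
```

For a pandas DataFrame, `df.to_dict("records")` yields one dict per row that you can pass to `render_prompt`.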

Handle API Rate Limits

To avoid hitting API rate limits, process your dataset in smaller batches and pause between requests. Here’s how:

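import time

# Generate outputs in batches, pausing between batches to stay under API rate limits.
# `dataset` is expected to be a pandas DataFrame (batches are taken with .iloc).
def generate_with_delay(dataset, prompt_template, delay=1, batch_size=10):
    results = []
    for start in range(0, len(dataset), batch_size):
        batch = dataset.iloc[start:start + batch_size]
        results.append(
            lore.generate_on_dataset(
                dataset=batch, prompt_template=prompt_template, number_of_samples=len(batch)
            )
        )
        time.sleep(delay)  # wait before sending the next batch
    return results

# Example usage with the DataFrame and prompt template defined earlier
results = generate_with_delay(df, prompt_template, delay=1, batch_size=10)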
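A fixed delay can still run into limits when traffic varies. A common alternative is to retry a failed call with exponential backoff and random jitter. This is a minimal sketch of that technique around any zero-argument callable; which exception the Lore client raises on a rate-limit error is not documented here, so the sketch retries on any `Exception`, which you would likely narrow in practice. `call_with_backoff` and `flaky` are hypothetical names.

```python
import random
import time


def call_with_backoff(fn, max_retries=5, base_delay=1.0, max_delay=30.0, sleep=time.sleep):
    """Call fn(), retrying with exponential backoff and jitter if it raises.

    The wait before retry n is base_delay * 2**n (capped at max_delay),
    scaled by a random factor between 0.5 and 1.0.
    """
    for attempt in range(max_retries + 1):
        try:
            return fn()
        except Exception:
            if attempt == max_retries:
                raise  # out of retries: surface the last error
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(delay * random.uniform(0.5, 1.0))  # jitter spreads out retries


# Demo: a stand-in function that fails twice before succeeding
attempts = {"count": 0}


def flaky():
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise RuntimeError("429 Too Many Requests")
    return "ok"


print(call_with_backoff(flaky, sleep=lambda s: None))  # prints "ok"
```

In a notebook you might wrap each batch call, for example `call_with_backoff(lambda: lore.generate_on_dataset(dataset=batch, prompt_template=prompt_template, number_of_samples=len(batch)))`.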