Connect HPE Machine Learning Data Management to Weights and Biases to track your data science experiments. Using HPE Machine Learning Data Management as our execution platform, we can version our executions, code, data, and models while still tracking everything in W&B.

Here we’ll use Pachyderm to manage our data and train our model.

Before You Start #

- You must have a W&B account

- You must have a HPE Machine Learning Data Management cluster

- Download the example code and unzip it. (or download this repo.

gh repo clone pachyderm/docs-contentand navigate todocs-content/docs/latest/integrate/weights-and-biases)

How to Use the Weights and Biases Connector #

- Create a HPE Machine Learning Data Management cluster.

- Create a W&B Account

- Copy your W&B API Key into the

secrets.jsonfile. We’ll use this file to make a HPE Machine Learning Data Management secret. This keeps our access keys from being built into our container or put in plaintext somewhere. - Create the secret with

pachctl create secret -f secrets.json - Run

make allto create a data repository and the pipeline.

About the MNIST example #

- Creates a project in W&B with the name of the HPE Machine Learning Data Management pipeline.

- Trains an MNIST classifier in a HPE Machine Learning Data Management Job.

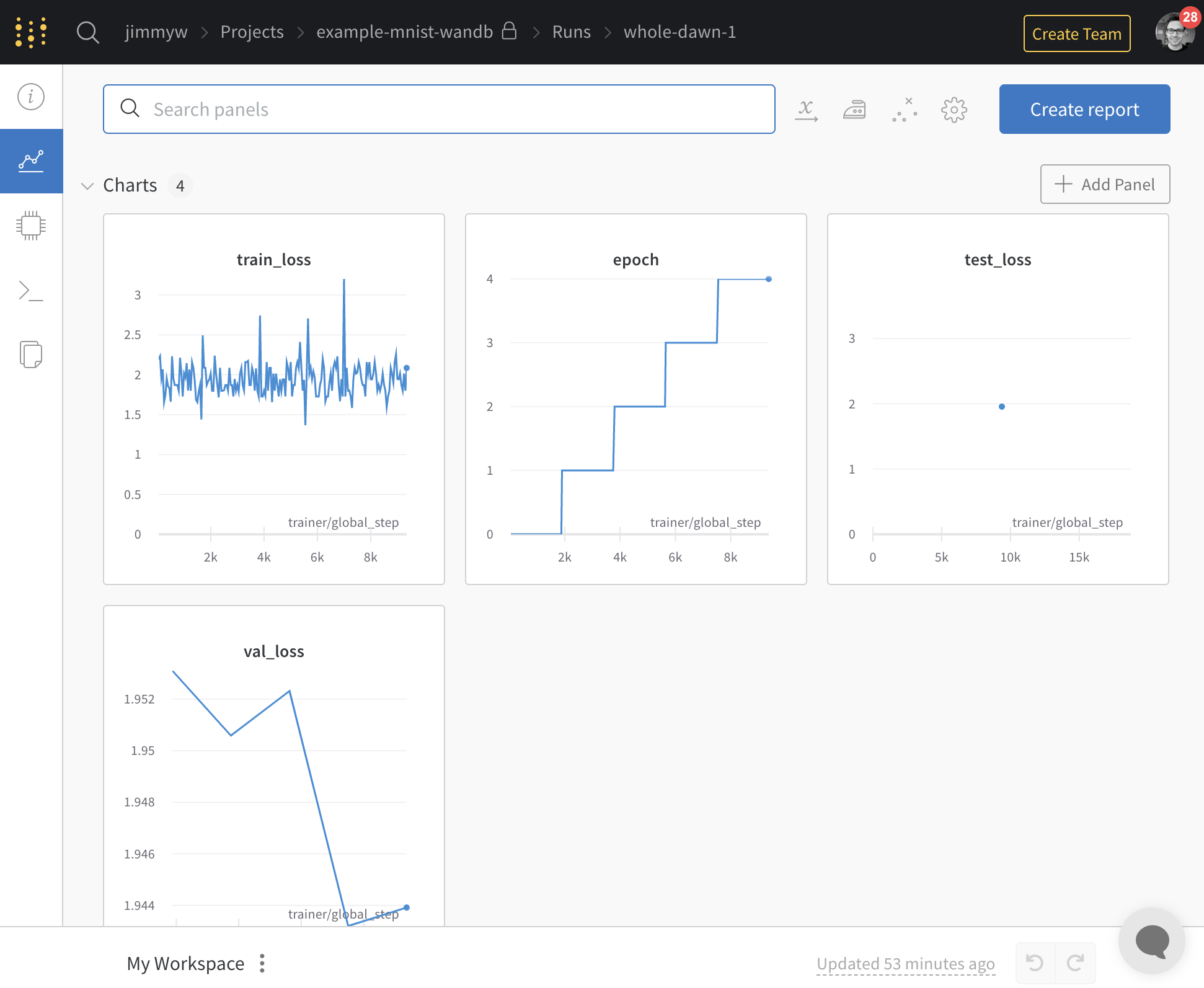

- Logs training info from training to W&B.

- If the Data or HPE Machine Learning Data Management Pipeline changes, it kicks off a new training process.